Why LLMs Fall Short for Analytics: How MachEye Transcends Limitations with SearchAI

by Ramesh Panuganty, Founder & CEO

When generative AI and Large Language Models (LLMs) gained popularity, many organizations jumped on the bandwagon to try various business use cases. While LLMs have found some useful applications in information retrieval and content generation, they cannot serve the analytics requirements of organizations on their own. We at MachEye built the core engineering for our AI-Powered Analytics platform from the ground up. By creating our own custom language models, we have been serving our customers’ analytics needs with intelligent search, actionable insights, and interactive audio-visuals.

Let’s discuss why a large language model cannot serve an analytics use-case for enterprise data by nature. I’ll also reveal how we strengthened and improved MachEye to dramatically enhance the impact of LLMs throughout a customer organization like never before.

Why are LLMs not a good fit for analytics use-cases?

As impressive as they are, leveraging LLMs in a business context with real-world data sets depends on the right application and associated guardrails. It might appear that LLMs hold immense potential to transform business analytics, but LLMs by themselves lack the plumbing to become a complete solution. These are eight major limitations that become apparent when one explores how LLMs handle complex business data.

1. Inaccurate search results

If you attempt to use an LLM as a search engine on your SQL data, you will find that it struggles to interpret natural language queries, handle queries on multiple attributes, work with intricate data, compute joins across multiple tables, and perform advanced date functions accurately.

For example, if an LLM gives an answer for “city with most sales” as “Glendale” – the first read might make you think that it is a good answer. But a true analytical system would go a step further to add a group-by of state (and country, if applicable) to avoid multiple “Glendale” across different states from getting aggregated. The correct answer in this case might be “San Jose, California”.
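To make the “Glendale” problem concrete, here is a minimal sketch using Python's built-in `sqlite3` module. The table name, columns, and sales figures are all made up for illustration; the only point is the difference between grouping by city alone and grouping by city and state.

```python
import sqlite3

# Hypothetical sales rows; cities, states, and amounts are invented for illustration.
rows = [
    ("Glendale", "California", 400.0),
    ("Glendale", "Arizona", 350.0),
    ("San Jose", "California", 600.0),
]

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (city TEXT, state TEXT, amount REAL)")
conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", rows)

# Naive query: groups by city name only, so both Glendales are merged into one.
naive = conn.execute(
    "SELECT city, SUM(amount) AS total FROM sales "
    "GROUP BY city ORDER BY total DESC LIMIT 1"
).fetchone()

# Disambiguated query: groups by city and state, keeping the two Glendales apart.
correct = conn.execute(
    "SELECT city, state, SUM(amount) AS total FROM sales "
    "GROUP BY city, state ORDER BY total DESC LIMIT 1"
).fetchone()

print(naive)    # ('Glendale', 750.0)
print(correct)  # ('San Jose', 'California', 600.0)
```

With this toy data, the naive query reports “Glendale” only because two unrelated cities were added together, while the disambiguated query returns San Jose, California.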

2. Difficulty in Differentiating SQL Across Data Sources

Not all data sources (such as BigQuery, Snowflake, and Redshift) use the same SQL dialect. It takes years of experience to build the right SQL for a given data source. Relying on LLM-generated SQL for the correctness of all your search queries will introduce complexity and reduce user trust in business analytics.

For example, LLMs will not be able to generate different code snippets as shown below, customized for the type of data store, to calculate the percentage of null values in a column.

For Snowflake:

SELECT count(*), SUM(CASE WHEN $attrname$ IS NULL THEN 1.0 ELSE 0.0 END) / CAST(COUNT(*) as DECIMAL(38,2)) AS nullPercent FROM (select $attrname$ FROM $tablename$ FETCH FIRST $rowlimit$ ROWS ONLY)

For BigQuery:

SELECT count(*), ROUND(SUM(CASE WHEN $attrname$ IS NULL THEN 1.0 ELSE 0.0 END) / CAST(COUNT(*) as DECIMAL),2) AS nullPercent FROM (select $attrname$ FROM $tablename$ limit $rowlimit$) as t

For SQL Server:

SELECT count(*), SUM(CASE WHEN $attrname$ IS NULL THEN 1.0 ELSE 0.0 END) / CAST(COUNT(*) as DECIMAL(38,2)) AS nullPercent FROM (select TOP $rowlimit$ $attrname$ FROM $tablename$) t
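One way to keep such dialect differences out of the hands of a text generator is to store a hand-written template per data source and only fill in the placeholders. The sketch below reuses the three templates above; the dictionary keys, the `render_null_percent_sql` helper, and the identifier check are hypothetical names and choices for illustration, not a description of any product's internals.

```python
import re

# SQL templates taken from the Snowflake, BigQuery, and SQL Server snippets above.
# The dictionary keys and the helper name are hypothetical, chosen for this sketch.
NULL_PERCENT_TEMPLATES = {
    "snowflake": (
        "SELECT COUNT(*), SUM(CASE WHEN $attrname$ IS NULL THEN 1.0 ELSE 0.0 END) / "
        "CAST(COUNT(*) AS DECIMAL(38,2)) AS nullPercent "
        "FROM (SELECT $attrname$ FROM $tablename$ FETCH FIRST $rowlimit$ ROWS ONLY)"
    ),
    "bigquery": (
        "SELECT COUNT(*), ROUND(SUM(CASE WHEN $attrname$ IS NULL THEN 1.0 ELSE 0.0 END) / "
        "CAST(COUNT(*) AS DECIMAL), 2) AS nullPercent "
        "FROM (SELECT $attrname$ FROM $tablename$ LIMIT $rowlimit$) AS t"
    ),
    "sqlserver": (
        "SELECT COUNT(*), SUM(CASE WHEN $attrname$ IS NULL THEN 1.0 ELSE 0.0 END) / "
        "CAST(COUNT(*) AS DECIMAL(38,2)) AS nullPercent "
        "FROM (SELECT TOP $rowlimit$ $attrname$ FROM $tablename$) t"
    ),
}

# Only plain (optionally dotted) identifiers are accepted, because the
# placeholders are substituted as raw text rather than bound parameters.
_IDENTIFIER = re.compile(r"[A-Za-z_][A-Za-z0-9_.]*")


def render_null_percent_sql(dialect, attrname, tablename, rowlimit):
    """Fill the dialect-specific template with validated identifiers."""
    template = NULL_PERCENT_TEMPLATES[dialect]
    for name in (attrname, tablename):
        if not _IDENTIFIER.fullmatch(name):
            raise ValueError(f"unsafe identifier: {name!r}")
    return (
        template.replace("$attrname$", attrname)
        .replace("$tablename$", tablename)
        .replace("$rowlimit$", str(int(rowlimit)))
    )


print(render_null_percent_sql("sqlserver", "email", "customers", 1000))
```

The design choice here is that the SQL shape for each data source is fixed and reviewed by a person, and only column names, table names, and row limits vary.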

It is also possible for the SQL generated by an LLM to be completely wrong. Take this example of an invalid SQL query as generated by ChatGPT, which doesn't even check for a null value.

3. LLMs cannot scale for broader user adoption

Without the appropriate infrastructure, LLMs lack the necessary guardrails to regulate data access, raising concerns about data compliance in every industry due to data sensitivity. The lack of data governance and data encryption policies for enterprise controls means the solution will not scale across users and user groups.

For example, how do you enforce access such that your North American sales team accesses only North American data while the EMEA team accesses only their region-specific data?

With LLMs, such granular customizations are difficult and not scalable.
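To illustrate the kind of guardrail in question, here is a minimal sketch of region-based row filtering. The group names, the `GROUP_REGIONS` mapping, and the `Sale` record are hypothetical; a real deployment would typically enforce this in the data layer rather than in application code.

```python
from dataclasses import dataclass

# Hypothetical mapping from user group to the regions that group may see.
GROUP_REGIONS = {
    "na_sales": {"North America"},
    "emea_sales": {"EMEA"},
}


@dataclass(frozen=True)
class Sale:
    region: str
    product: str
    amount: float


def visible_rows(group, rows):
    """Return only the rows whose region the given user group may access."""
    allowed = GROUP_REGIONS.get(group, set())  # unknown groups see nothing
    return [row for row in rows if row.region in allowed]


sales = [
    Sale("North America", "lawn chair", 120.0),
    Sale("EMEA", "lawn chair", 95.0),
    Sale("North America", "iced coffee", 40.0),
]

print(visible_rows("emea_sales", sales))
```

The filter is applied before any answer is generated, so the same question returns different rows depending on who is asking it.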

4. Limited Scope to Go Beyond Search and Answers

LLMs restrict answers to the paradigm of “you only get what you ask for”. However, in analytics, you can’t expect your users to keep asking questions day in and day out. In the life cycle of intelligence, data should lead to answers, insights, causal analysis, and actionable intelligence – all in a single search.

For example, a query such as “tell me about the lawn chair sales” should not only give the dollar value and quantity of lawn chair sales, but also provide insights into which target market had the majority of sales and which other products are sold together with lawn chairs, and finally give a reason for the behavior behind an insight, such as the marketing campaign that contributed most to the sales increase of this product.

If these are not delivered, LLMs take data analytics back several generations. Search is the starting point, but it must become optional at some point. Today’s users deserve automated, personalized insights as they happen in the data, and not just when they search.

5. Absence of Direct Metadata Cataloging and Curation

Because there is no data model engineering and catalog generation in LLMs, it is difficult to understand how LLMs curate data. Without transparency and visibility, there are possibilities of hallucinations, biases, and quality issues coming up in the generated answers.

For example, how do you specify that the relationship between the students table and the courses table is many-to-many, and that the relationship between the students table and the university table is many-to-one, applied only as a conditional join for full-time students?

With analytics platforms, metadata such as entity relationships is maintained automatically, with the flexibility to add custom metrics and create computed attributes. With LLMs, however, metadata needs to be created or configured manually every time new data is added.
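As a rough illustration of what such catalog metadata could look like, the sketch below records the two relationships from the students example. The `Relationship` class, the `enrollments` bridge table, the `enrollment_type` column, and the `<table>_id` / `id` key naming are all assumptions made for this example and do not describe any particular platform's catalog format.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Relationship:
    left: str
    right: str
    cardinality: str                 # "many-to-many" or "many-to-one"
    bridge: Optional[str] = None     # linking table, needed for many-to-many
    condition: Optional[str] = None  # extra predicate for a conditional join


# Hypothetical catalog entries for the students example.
CATALOG = [
    Relationship("students", "courses", "many-to-many", bridge="enrollments"),
    Relationship(
        "students",
        "university",
        "many-to-one",
        condition="students.enrollment_type = 'full-time'",
    ),
]


def join_sql(rel):
    """Build JOIN clauses, assuming `<table>_id` foreign keys and `id` primary keys."""
    if rel.cardinality == "many-to-many":
        if rel.bridge is None:
            raise ValueError("many-to-many relationships need a bridge table")
        return (
            f"JOIN {rel.bridge} ON {rel.bridge}.{rel.left}_id = {rel.left}.id "
            f"JOIN {rel.right} ON {rel.right}.id = {rel.bridge}.{rel.right}_id"
        )
    on = f"{rel.left}.{rel.right}_id = {rel.right}.id"
    if rel.condition:
        on += f" AND {rel.condition}"  # the join applies only when the condition holds
    return f"LEFT JOIN {rel.right} ON {on}"


for rel in CATALOG:
    print(join_sql(rel))
```

Once relationships are declared like this, every generated query can draw its joins from the catalog instead of guessing them from column names.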

6. LLMs lack the application to onboard new data sets and users

Offering a trustworthy analytics application would require an enterprise to build a repeatable process to onboard both the data sets as well as the user base. There is still a lack of transparency in how to manage LLM operations better.

For example, let's say you implemented ChatGPT for a customer engagement analytics use-case. If you needed to include three additional columns, what steps would you follow to configure, deploy, test, and roll out the changes?

With a modern analytics platform like MachEye, a user could simply re-scan the data source and get these three additional columns in the catalog, all without any downtime.

7. LLMs force a trial-and-error approach to interactivity

Interactivity does not mean asking repeated questions and getting answers. It is the ability to view answers as different chart types, text narrations, data tables, and pivot tables, and the ability to analyze further with drill-downs and why-analysis. Everything from getting the right answer to selecting the right chart option cannot be left to trial and error.

With LLMs, interactivity of answers is reduced to trial and error in asking questions multiple times and getting them right.

An analytics product should offer more answers, insights, and interactivity with fewer user queries for any business scenario.

8. Token-based pricing models can result in budget overruns

Building a demo solution with an LLM might seem easy, but without careful consideration of the right pricing model, the ability to predict chargebacks or revenues, and a plan for handling overages, enterprises could face cost overruns.

You may know the price of the service as $30 for every 1M tokens, but how do you estimate the average daily usage per user? And how many tokens would be needed for every usage? What would a peak volume be? How do you budget for growth of data size, usage and users over time?
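To see how quickly these unknowns move the bill, here is a back-of-the-envelope sketch. Only the $30 per 1M tokens price comes from the text above; the user counts, queries per day, tokens per query, and the 22 working days per month are assumptions chosen purely for illustration.

```python
PRICE_PER_MILLION_TOKENS = 30.0  # price quoted in the text above


def monthly_cost(users, queries_per_user_per_day, tokens_per_query,
                 working_days=22, price_per_million=PRICE_PER_MILLION_TOKENS):
    """Estimate monthly spend in dollars from assumed usage figures."""
    total_tokens = users * queries_per_user_per_day * tokens_per_query * working_days
    return total_tokens / 1_000_000 * price_per_million


# Assumed baseline: 500 users, 10 queries a day, 2,000 tokens per query.
baseline = monthly_cost(users=500, queries_per_user_per_day=10, tokens_per_query=2_000)

# Assumed peak: same users, 30 queries a day, 4,000 tokens per query.
peak = monthly_cost(users=500, queries_per_user_per_day=30, tokens_per_query=4_000)

print(baseline)  # 6600.0
print(peak)      # 39600.0
```

Under these assumed figures the same user base costs six times more at peak than at baseline, which is exactly the kind of spread that makes budgeting difficult.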

A very likely scenario is that the adoption of generative AI and ad-hoc search varies with user age group and with how relevant the content is to each user's job, which makes cost predictions challenging. In some cases, this may lead to unexpected bills and soaring expenses.

How does MachEye enhance LLMs for analytics?

By integrating LLMs into MachEye's modern analytics platform architecture, we've harnessed the power of LLM analytics, offering a more user-friendly analytics experience that provides 100% accurate answers, insights, generative content, and enterprise-grade data governance and scalability. Our promise was also to deliver analytics that are consumable and actionable, and we've exceeded expectations.

Here is how MachEye's SearchAI works:

- MachEye’s intelligent search uses LLMs to improve the parsing of vocabulary to map to SQL data, coupled with the enterprise governance guardrails, security controls and data observability

- MachEye’s actionable insights use LLMs to improve on the feedback, relevancy and deduplication

- MachEye’s interactive stories use LLMs to improve on the text presentation, audio presentations and relevancy

- MachEye’s headlines use LLMs to generate more relevant headlines, making search an optional feature. Users don’t need to wake up every day just to type a few more questions

- MachEye’s suggested searches use LLMs to improve on the usability, coupled with our ranking and usage patterns

- MachEye’s low-prep / no-prep onboarding technology uses LLMs to identify the catalog so that the everyday search experience is improved

All of these are coupled with multiple guardrails that empower analysts to curate data enrichment and provide input on AI-generated insights, thereby enhancing the value and trustworthiness of results. Here is a detailed overview of MachEye’s key features:

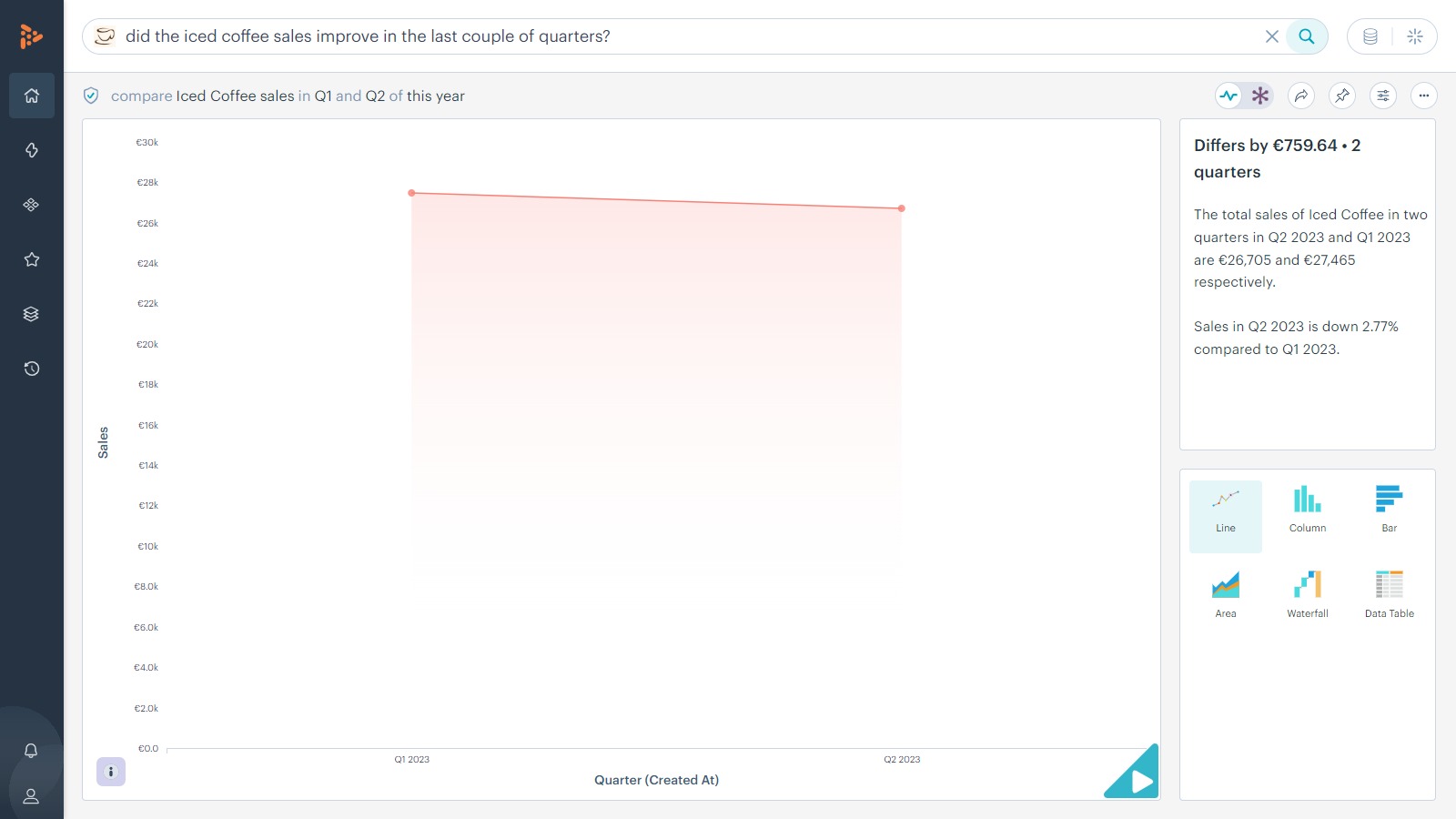

AI-powered search in natural language

MachEye’s AI-powered enterprise search has always worked for queries like “how much were the iced coffee sales in Oregon over the last two quarters” with our own language models, and now a query like “did the iced coffee sales improve in the last couple of quarters?” is also understood.

We are not replacing our existing search technology with LLMs, but are using them to improve certain scenarios that cannot be handled in traditional ways.

Users can now ask questions, even ambiguous ones, and still get 100% accurate answers.

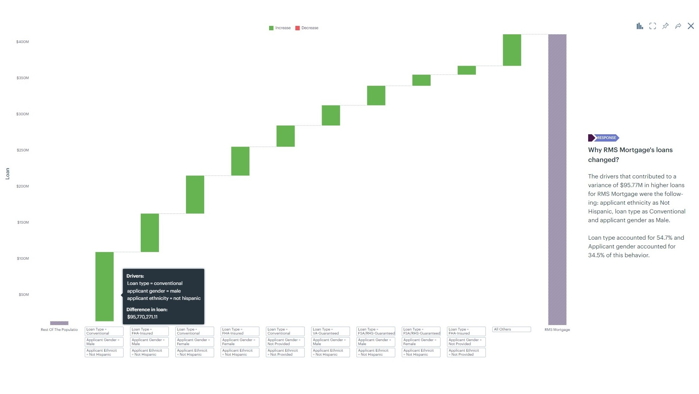

AI-generated insights

Beyond answering the question, MachEye identifies a series of signals that are relevant to the context of the user query and runs the additional dataset with AI models on infrastructure that gets deployed on the fly.

Any discovered insights go through de-duplication and ranking so that the user sees the most business-relevant insights, presented with best-fit visualizations, text, and audio narratives.

This eliminates the scenarios where users don’t even know what questions to ask. Do you see one more way in which we break the paradigm of ‘you only get what you ask for’?

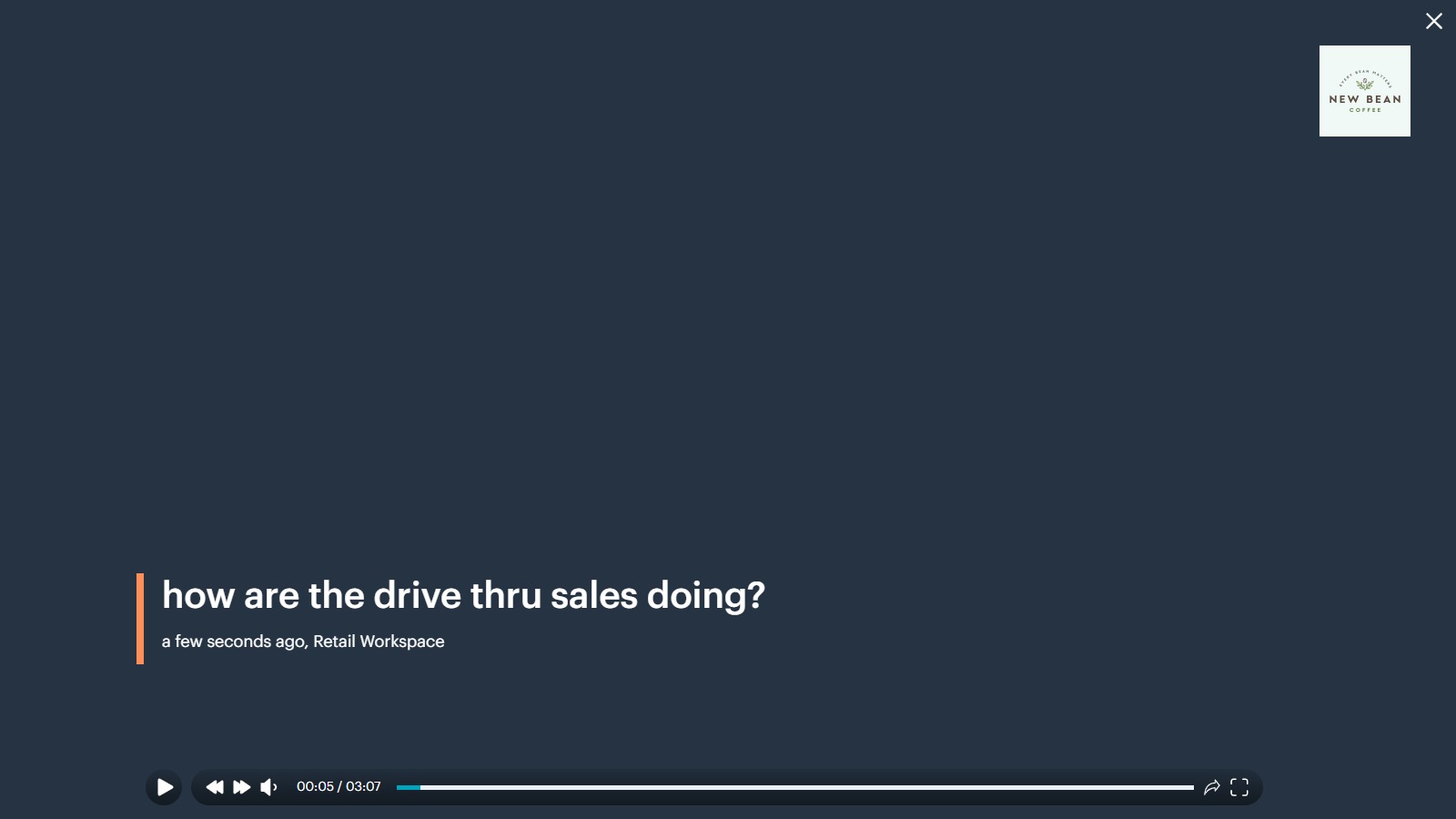

AI-powered interactive presentations & audio-visuals

Delivering the appropriate visualizations along with accompanying text narrations significantly enhances the user experience for enterprise users.

MachEye takes it a step further by presenting answers, insights, and why-analysis in both text and audio formats, resulting in an eight-fold improvement in consumption.

This provides users with an experience akin to having an analyst personally guide them through understanding the insights. Each of your business users will have a dedicated co-pilot to assist them with their everyday analytics needs.

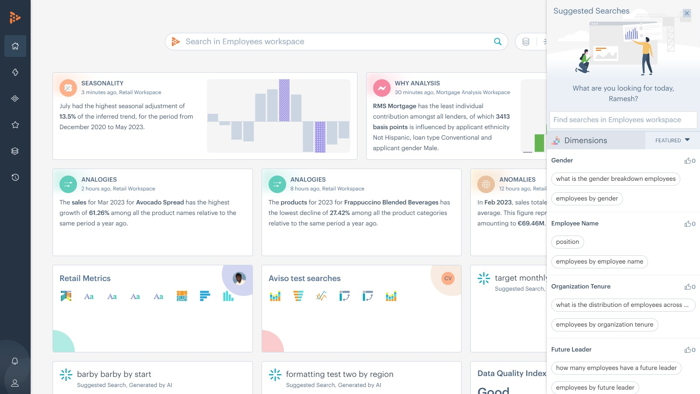

AI-powered suggested searches

MachEye helps you overcome the challenge of starting with an empty search bar with suggested starter questions, guiding your exploration of data.

The suggested searches are generated based on usage patterns, both active and passive feedback, and catalog updates, so that the suggestions are always personalized and relevant.

As users pick any of the suggested searches, they can easily ask follow-up questions or apply further suggestions to keep exploring.

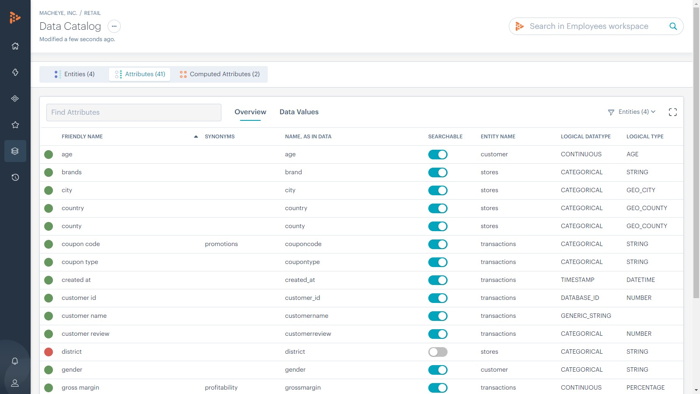

AI-assisted data modeling

MachEye empowers data analysts to create data models that serve the search needs of every business user. MachEye always had custom language models to generate synonyms for common business terms like revenue versus sales, but LLMs help in generating a friendly name such as “User Rating” for a column named “value”, which is not possible with traditional technologies and immensely improves the consumption of analytics for business users.

Bringing a human in the loop to leverage AI for faster and more reliable answers, while maintaining the guardrails of enterprise controls, gives immense control and ease of governance.

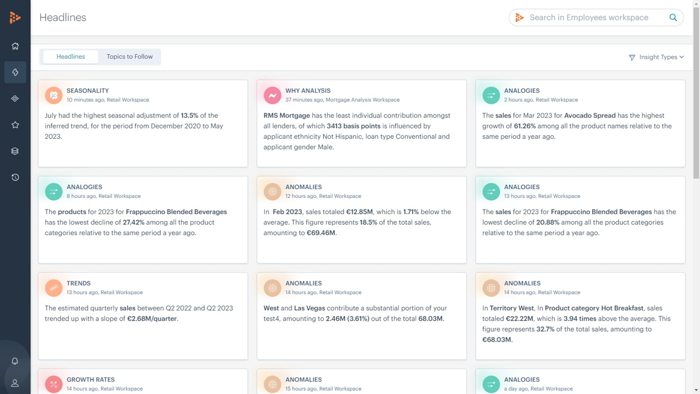

AI-powered headlines

MachEye generates personalized headlines that are relevant to every user, based on user searches, usage patterns, business metrics, and the catalog. This process not only eliminates the need for searches day in and day out but also makes the entire system smarter for all users.

Imagine a user receiving a headline from MachEye, as an interactive audio-visual, saying “The Iced Coffee sales last week declined by 12.42% in Northern California compared to the prior week, as the marketing campaign X ended two weeks ago.”

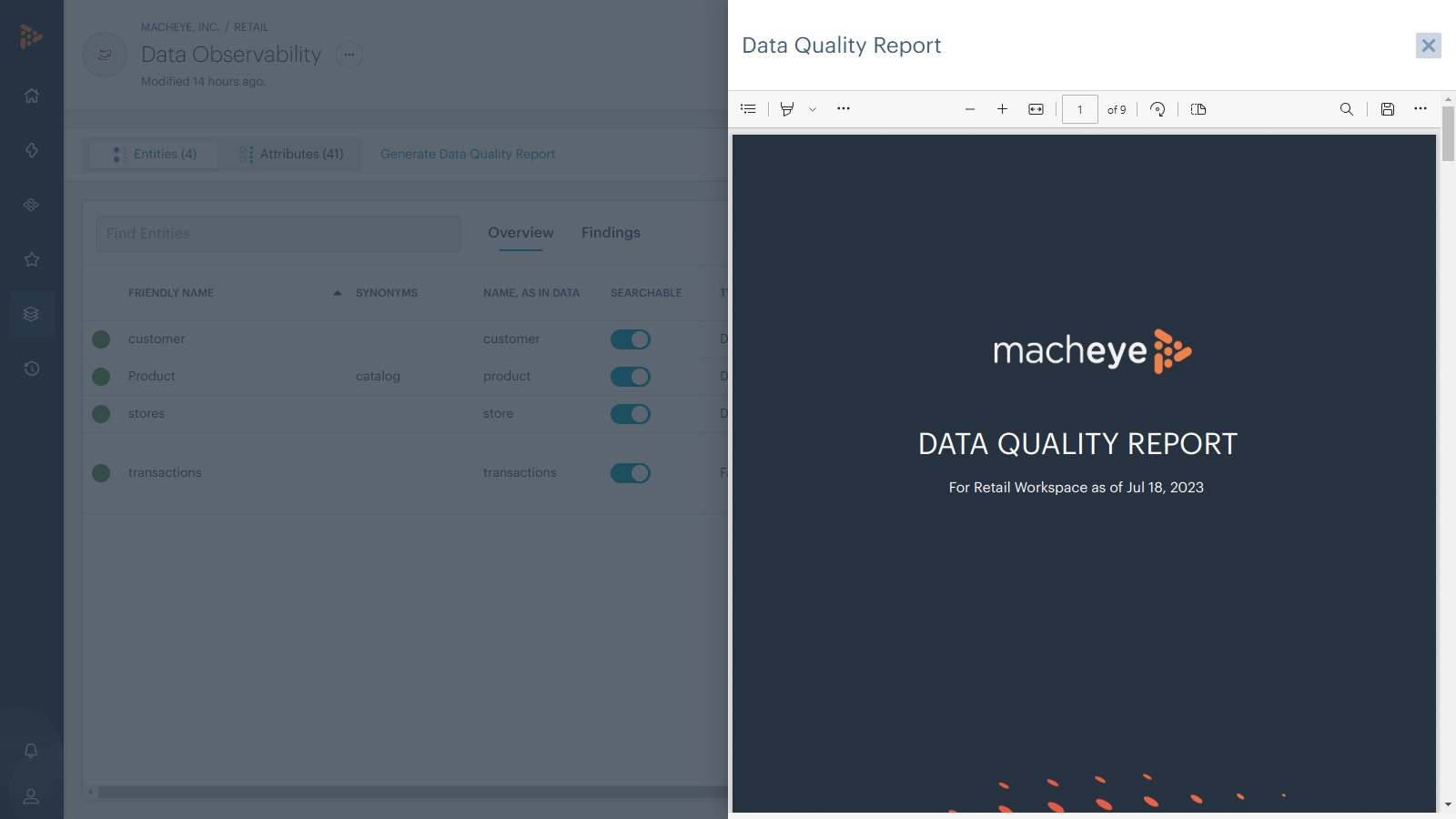

AI-powered data quality engine

MachEye is the only analytics platform that measures the quality of your data on a continuous basis in order to use it for delivering accurate insights.

The data is constantly analyzed using sophisticated AI models, measured across eight key characteristics, and presented in a quantitative manner similar to an air quality index.

MachEye assists in enhancing data observability with the intent of enriching the quality of your analytics. This data quality index is highlighted at all levels of your data, ranging from the data store to the attribute level, and the AI helps in presenting the improvement opportunities.

How does MachEye leverage LLMs and shape the modern analytics stack?

By harnessing the strengths of our foundational and proven technologies in intelligent search, actionable insights, and interactive audio-visuals, combined with the capabilities of Large Language Models (LLMs), MachEye's AI-powered enterprise search provides answers, insights, why analysis, and interactive presentations. This is all achieved while maintaining 100% accuracy, trust, data governance, and enterprise security. Thanks to human-in-the-loop machine learning, the system continually enhances itself, all while keeping your data securely managed.